
Economics of Large-Scale Computing

Planning

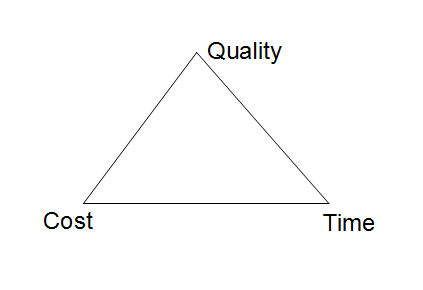

Financial planning for a large-scale computational project is difficult, because the manager needs to optimize three variables: quality of solution, cost and time. For example, in genome assembly, it is possible to derive a low-quality assembly by running a de Bruijn graph based assembler with a single k-mer size, or a higher-quality assembly by checking multiple k-values. However, the second option takes much longer. The higher-quality solution can be obtained faster by running the assemblies for multiple k-values in parallel on different computers, but that pushes up the hardware cost. Overall, the manager needs to properly consider the following factors:

a) Cost of purchasing and upgrading the computing infrastructure
b) Time to develop code
c) Time for actual computation
d) Energy consumption

Among those factors, the cost of purchasing hardware is paid at the beginning of the project and a wrong decision can be expensive. That often pushes managers to go for commodity hardware instead of custom hardware.
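The trade-off between computation time and hardware cost in the genome assembly example can be sketched in a few lines of Python. This is only an illustration under simple assumptions: every assembly run takes the same time, runs for different k-values are fully independent, and the function name `assembly_plan` and all numbers are hypothetical.

```python
import math

def assembly_plan(k_values, machines, hours_per_run, price_per_machine):
    """Return (wall_clock_hours, hardware_cost) for a hypothetical plan."""
    # Runs for different k-values are independent, so they can be
    # processed in batches of `machines` runs at a time.
    batches = math.ceil(k_values / machines)
    wall_clock_hours = batches * hours_per_run
    # Hardware is paid for up front, whether or not it is kept busy.
    hardware_cost = machines * price_per_machine
    return wall_clock_hours, hardware_cost

# Three plans, with made-up numbers for illustration only.
plans = {
    "one k-value, one machine": assembly_plan(1, 1, 10, 2000),
    "five k-values, one machine": assembly_plan(5, 1, 10, 2000),
    "five k-values, five machines": assembly_plan(5, 5, 10, 2000),
}
for name, (hours, cost) in plans.items():
    print(f"{name}: {hours} hours, hardware cost {cost}")
```

Under these assumptions the second plan improves quality at five times the waiting time, while the third plan recovers the original waiting time at five times the up-front hardware cost.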

Commodity hardware vs expensive hardware

‘Commodity hardware’ is a fashion currently popular among software programmers. It is a closed-minded approach that introduces inefficiency into programming. An earlier incarnation of the same inefficiency appeared in the early 1990s, when it was assumed that Microsoft or other companies could produce visually appealing programming interfaces to be programmed by ‘commodity programmers’.

Why not stick to one type of hardware and keep increasing the number of machines to do more and more computing? Machine cost aside, power supply is a running cost that becomes too large with too many computers. Replacing hardware is also difficult, and that difficulty is paid for as a cost of labor.
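To see how the running cost of power compares with the purchase cost as machines are added, a rough sketch follows. It assumes every machine draws constant power around the clock, and the function names, prices and power figures are hypothetical placeholders rather than measured values.

```python
HOURS_PER_YEAR = 24 * 365

def cluster_costs(machines, price_per_machine, kw_per_machine,
                  price_per_kwh, years):
    """Return (purchase_cost, energy_cost) for a cluster run continuously."""
    purchase = machines * price_per_machine
    # Energy cost grows with both the number of machines and the time run.
    energy = (machines * kw_per_machine * HOURS_PER_YEAR
              * years * price_per_kwh)
    return purchase, energy

def years_until_energy_matches_purchase(price_per_machine, kw_per_machine,
                                        price_per_kwh):
    """Years of continuous use after which power spending equals the price."""
    yearly_energy_cost = kw_per_machine * HOURS_PER_YEAR * price_per_kwh
    return price_per_machine / yearly_energy_cost

# Made-up numbers: 100 machines, 1000 each, 0.3 kW each, 0.10 per kWh, 3 years.
purchase, energy = cluster_costs(100, 1000, 0.3, 0.10, 3)
print(f"purchase: {purchase}, energy over 3 years: {energy:.0f}")
```

The purchase cost is paid once, but the energy term keeps growing for as long as the machines run, which is why it can dominate for large clusters kept in service for years.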