
Local Cluster, HPC, Condor and Cloud Computing

In terms of computing infrastructure, a researcher or group of researchers interested in processing large volumes of genomic data can choose among many options. Here we explain what the popular terms mean, and the advantages and disadvantages of each mode of computing. Traditional sequencing centers have large computing resources, so finding computing ‘horsepower’ is not a big constraint for them. In contrast, small research labs working on NGS data often need to find suitable computing resources within or outside their organization. This section is written to help them weigh the pluses and minuses of the various alternatives.

Computer Cluster

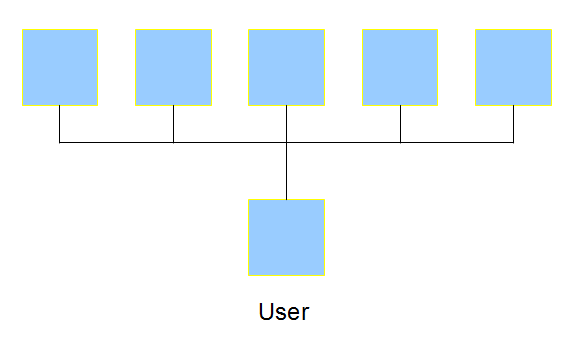

If a single server is not enough to process the available data quickly, the easiest option is to purchase multiple powerful computers and connect them over an Ethernet-based network. Such a system is called a computer cluster. Usually the computers are almost identical; one of them (the head node) is set aside for user interaction, while the rest (the compute nodes) do the computing. The cluster usually runs a batch system such as OpenPBS or Torque, which makes it easy to submit many computing tasks (‘jobs’) and distribute them across the compute nodes. Often the nodes also share storage (hard disks) over the network.
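As an illustration of how jobs are handed to such a batch system, the Python sketch below writes one Torque/PBS job script per sample. It assumes a Torque-style resource request (`nodes=1:ppn=N`; PBS Professional and OpenPBS use `select=1:ncpus=N` instead), and the reference file `ref.fa`, the `<sample>.fastq` naming and the `bwa mem` alignment step are hypothetical placeholders for whatever the actual pipeline runs.

```python
from pathlib import Path


def make_pbs_script(sample: str, threads: int = 8, walltime: str = "02:00:00") -> str:
    """Return a Torque/PBS job script that aligns one sample's reads.

    Hypothetical example: 'ref.fa', the '<sample>.fastq' naming scheme and
    the bwa command stand in for a real pipeline step.
    """
    return "\n".join([
        "#!/bin/bash",
        f"#PBS -N align_{sample}",                             # job name shown by qstat
        f"#PBS -l nodes=1:ppn={threads},walltime={walltime}",  # one node, N cores, time limit
        "#PBS -j oe",                                          # join stderr into stdout
        "cd $PBS_O_WORKDIR",                                   # directory qsub was run from
        f"bwa mem -t {threads} ref.fa {sample}.fastq > {sample}.sam",
        "",
    ])


def write_job_scripts(samples: list[str], outdir: Path) -> list[Path]:
    """Write one job script per sample into outdir and return their paths."""
    outdir.mkdir(parents=True, exist_ok=True)
    paths = []
    for sample in samples:
        path = outdir / f"{sample}.pbs"
        path.write_text(make_pbs_script(sample))
        paths.append(path)
    return paths
```

On the head node, each generated file would then be submitted with `qsub` (for example `qsub jobs/s1.pbs`), and the batch system places the jobs on free compute nodes.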

HPC

A high-performance computing (HPC) center at a university or large organization has a dedicated team of experts maintaining a much larger cluster than a small group could afford. Its mode of operation is not much different from that of a local cluster, except that the computing system is shared among many groups competing for its resources.

Most major organizations have central high-performance computing centers. Typically, a biologist needs to obtain a computing account at the center to use its computers. These centers consist of many multi-processor computers (nodes), so that many programs can run in parallel, and each node in turn contains many cores.
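Because an HPC cluster offers parallelism at two levels, the nodes and the cores inside each node, a batch of independent single-core jobs runs in ‘waves’ once it exceeds the number of available cores. The Python sketch below shows how tasks spread over nodes × cores and gives a rough wall-clock estimate. The function names are hypothetical, and the round-robin placement is an assumption; a real scheduler decides placement itself.

```python
import math


def assign_tasks(tasks, nodes, cores_per_node):
    """Place each task on a (node, core) slot in round-robin order.

    Hypothetical helper: a real batch scheduler makes its own placement
    decisions. Tasks beyond nodes * cores_per_node wrap around and queue
    behind earlier tasks on the same slot.
    """
    slots = nodes * cores_per_node
    plan = {}
    for i, task in enumerate(tasks):
        node, core = divmod(i % slots, cores_per_node)
        plan.setdefault((node, core), []).append(task)
    return plan


def estimate_wall_hours(n_tasks, nodes, cores_per_node, hours_per_task):
    """Rough wall-clock time, assuming equal-length single-core tasks."""
    waves = math.ceil(n_tasks / (nodes * cores_per_node))
    return waves * hours_per_task
```

For example, `assign_tasks([f"sample{i}" for i in range(10)], nodes=2, cores_per_node=4)` places `sample0` and `sample8` on slot `(0, 0)`, and `estimate_wall_hours(10, 2, 4, 1.5)` returns `3.0`: ten tasks on eight cores take two waves.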

Condor

The Condor system is another way of achieving the same goal in a large organization. Unlike an HPC center, Condor does not have its own dedicated set of computers. Instead, it connects the personal computers across an organization and runs jobs on them when their owners are not using them. In this way it harvests the idle time of machines across the campus, which are running anyway. By its nature, such a system is highly heterogeneous, so users need to take extra care in specifying their jobs.
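Condor is now developed under the name HTCondor. Because the machines in a Condor pool differ, a job's submit description usually states exactly what the job needs. The Python sketch below builds such a description for a batch of independent jobs. The executable name, the `sample_<N>.fastq` inputs and the resource values are hypothetical, and the `requirements` expression is only one example of restricting jobs to Linux x86-64 machines.

```python
def condor_submit_description(executable, n_jobs, memory_mb=4096):
    """Build an HTCondor submit description for n_jobs independent tasks.

    Hypothetical example: the script name and the sample_<N>.fastq input
    naming are placeholders.
    """
    lines = [
        "universe = vanilla",
        f"executable = {executable}",
        # $(Process) expands to 0, 1, ..., n_jobs - 1 for the queued jobs
        "arguments = sample_$(Process).fastq",
        "transfer_input_files = sample_$(Process).fastq",
        # Desktop machines differ, so state what the job needs to run
        'requirements = (OpSys == "LINUX") && (Arch == "X86_64")',
        "request_cpus = 1",
        f"request_memory = {memory_mb}",
        # No shared disk is assumed: ship inputs in and outputs back
        "should_transfer_files = YES",
        "when_to_transfer_output = ON_EXIT",
        "output = align.$(Process).out",
        "error = align.$(Process).err",
        "log = align.log",
        f"queue {n_jobs}",
    ]
    return "\n".join(lines) + "\n"
```

The text would be saved to a file such as `align.sub` and submitted with `condor_submit align.sub`; Condor then matches each job to an idle machine that satisfies the requirements.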

Cloud

Cloud-based systems take the HPC concept outside the organization. Cloud services from other companies, such as Amazon, can be seen as a ‘global’ high-performance computing center. For a small fee, anyone can rent time on those computers, run a program and then quit. Data left on the rented machines is generally lost when they are released, so results must be copied elsewhere before the run ends. Amazon also provides two related services: data storage (Amazon S3) and Hadoop (Amazon Elastic MapReduce).
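Hadoop implements the MapReduce model: a map step turns each input record into key-value pairs, the framework groups the pairs by key (the shuffle), and a reduce step combines each group. As a minimal sketch of that data flow, the Python code below counts k-mers in sequencing reads. It runs every phase in a single process, whereas Hadoop would run many mappers and reducers on different machines; the function names are illustrative and not part of any Hadoop API.

```python
from collections import defaultdict
from itertools import chain


def map_kmers(read, k):
    """Map step: emit (k-mer, 1) for every k-mer in one read."""
    for i in range(len(read) - k + 1):
        yield read[i:i + k], 1


def shuffle(pairs):
    """Shuffle step: group emitted values by key, as the framework would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_counts(groups):
    """Reduce step: sum the values collected for each key."""
    return {key: sum(values) for key, values in groups.items()}


def count_kmers(reads, k):
    """Run map, shuffle and reduce over all reads."""
    pairs = chain.from_iterable(map_kmers(read, k) for read in reads)
    return reduce_counts(shuffle(pairs))
```

For example, `count_kmers(["ACGT", "CGTA"], k=2)` gives `{"AC": 1, "CG": 2, "GT": 2, "TA": 1}`. Because each read is mapped independently and each k-mer is reduced independently, both steps can be spread across many machines.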

Which mode of computing is better? The answer depends on many factors: (i) speed of computation, (ii) cost, (iii) data security and (iv) long-term reliability.

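Is an HPC center better than a remote cloud because it is free to use? The answer depends on power usage and hidden costs: the center's computers still consume electricity and need staff, which the organization pays for even if the individual user does not. The price of power also varies by location; Grant County, for example, offers cheap power.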
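The hidden costs can be made explicit with a back-of-the-envelope comparison. The Python sketch below treats the yearly cost of a machine running around the clock as amortized hardware plus electricity plus administration, and the cloud's cost as an hourly rate times the hours actually used. The function names are hypothetical, and every number in the example call is a made-up placeholder, not a real price.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def local_cost_per_year(hardware_price, lifetime_years, avg_power_kw,
                        price_per_kwh, admin_per_year):
    """Yearly cost of owning a machine that runs around the clock."""
    hardware = hardware_price / lifetime_years               # straight-line amortization
    power = avg_power_kw * HOURS_PER_YEAR * price_per_kwh    # kW * h * $/kWh
    return hardware + power + admin_per_year


def cloud_cost_per_year(hourly_rate, hours_used):
    """Yearly cost of renting capacity only for the hours actually needed."""
    return hourly_rate * hours_used


def break_even_hours(local_yearly_cost, hourly_rate):
    """Hours of use per year above which renting costs more than owning."""
    return local_yearly_cost / hourly_rate
```

With placeholder values of $6,000 of hardware kept for 3 years, a 0.5 kW average draw, $0.10 per kWh and $1,000 a year of administration, `local_cost_per_year(6000, 3, 0.5, 0.10, 1000)` comes to about $3,438, so renting at a placeholder $0.50 an hour costs more only beyond roughly 6,876 hours of use per year. A lower electricity price shrinks only the power term.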

Privacy concerns arising from illegal access and arbitrary seizure of data by governments have emerged as a new threat to cloud computing. Even when a loss of privacy is caused by government action, the company whose data was exposed remains liable to its customers, which increases its potential risk.